Why should you consider SEO in a redesign?

In short, because you have a lot to lose. Let’s say your website is performing really well: rankings are strong, organic traffic is flowing and revenue is growing. Do you really want to undo all that hard and expensive work?

However, by thinking strategically, you can take the opportunity to improve a site’s performance after a redesign.

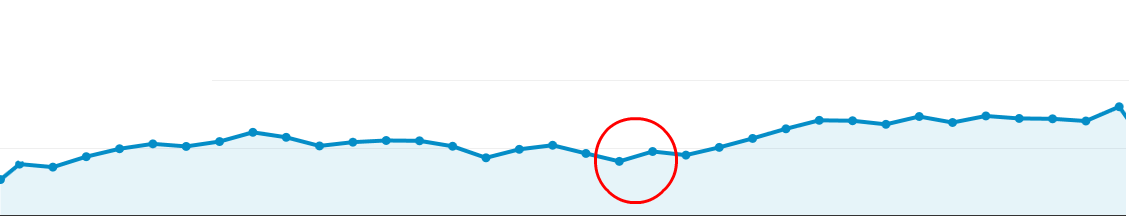

This diagram shows a steady increase in traffic (from the red circle onwards), even during the re-indexing phase. If you do a redesign correctly, you won’t lose any traffic or rankings; in fact, you’ll gain them.

Below are some tips that can help you understand both the test site being built and your current site from an SEO point of view. This is critical when changing your website around.

Tip 1 – Think about your SEO

The first thing is to think about SEO. Very often clients don’t stop to consider the impact that changing their website has on SEO. They chuck away valuable content from historical pages or completely change every single URL without redirecting the old ones.

This happens because they misunderstand how Google reads a website and how URLs hold credibility. It’s no fault of their own; it happens.

Tip 2 – Crawling the existing site

You should know what your site’s structure looks like; you will fail if you don’t. Grabbing its meta data and URLs is critical to identifying exactly what is changing and why.

How do I do that?

Your SEO crawl will give you a road map of how your site is currently set out. The best way to do this is to use a tool like Screaming Frog. Once you have the current site’s meta data and structure, you will know what to match on the new site.

Tip 3 – Auditing the old site

Free tools like Woorank will do, but we advise you to get your hands dirty, so to speak, and do it yourself. There’s nothing like getting into the nitty gritty of your site to find any problems.

Why audit the site?

You need to know what search engines like and don’t like about your site. This helps you recognise any problems, but also enables you to see which areas must be retained.

What am I looking for?

Here are some things to check. Using Screaming Frog, I advise checking the following:

- Duplicate page titles

- Missing H1 tags

- Duplicate H1 tags

- Multiple H1 tags

- Missing meta descriptions

- Missing page titles

- Duplicate meta descriptions

- Canonical tags

- Canonicalisation

- Broken internal/external links

- Image alt text
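Some of these checks can also be run against a crawl export with a short script. The following is only a sketch: the sample rows are made up, and the column names ("Address", "Title 1", "H1-1") are assumptions about how the export is laid out, so adjust them to match your own file.

```python
import csv
import io
from collections import defaultdict

# Hypothetical sample standing in for a crawl export. The column names
# ("Address", "Title 1", "H1-1") are assumptions and may differ in your file.
SAMPLE_EXPORT = """Address,Title 1,H1-1
https://domain.com/,Home,Welcome
https://domain.com/about,About us,
https://domain.com/contact,Home,Contact
"""


def audit_rows(rows):
    """Return (duplicate_titles, missing_h1) for an iterable of row dicts."""
    urls_by_title = defaultdict(list)
    missing_h1 = []
    for row in rows:
        title = (row.get("Title 1") or "").strip()
        if title:
            urls_by_title[title].append(row["Address"])
        if not (row.get("H1-1") or "").strip():
            missing_h1.append(row["Address"])
    # A title is a duplicate when more than one URL shares it.
    duplicates = {t: urls for t, urls in urls_by_title.items() if len(urls) > 1}
    return duplicates, missing_h1


rows = list(csv.DictReader(io.StringIO(SAMPLE_EXPORT)))
duplicate_titles, missing_h1 = audit_rows(rows)
print("Duplicate titles:", duplicate_titles)
print("Missing H1:", missing_h1)
```

The same pattern extends to meta descriptions or any other column in the export. To use a real file, replace the `io.StringIO(...)` sample with an opened CSV file.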

You should also be checking for:

- Robots.txt

- Site speed and performance using Google’s PageSpeed Tools

- Duplicate content (do exact match search “insert content” or use Copyscape)

- Pages indexed by Google (do a site: command in Google)

- URL structure

- XML sitemaps

Tip 4 – Noindex your test site

While you’re working on your test site, you do not want Google to index it. If you have added new content, it will get indexed. Then, when the new site is ready to launch, the new content will have no value, because it will count as a duplicate of the test-site version that was indexed while you were working on it.

Your web developer can noindex a site in two ways.

If you have WordPress you can simply check the box that says: “Discourage search engines from indexing this site.” on the Reading screen under the Settings tab.

This adds a robots meta tag with a noindex directive to the <head> of every page.

You have a second option, which is to block the site in the robots.txt file. This is a little trickier, which is why most CMSs offer a simpler box-ticking option.

If your CMS doesn’t allow for this, you can put the following in your robots.txt file:

User-agent: *

Disallow: /
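If you want to confirm that these two lines really do shut crawlers out, Python’s standard library can parse the rules and answer the question for any URL. This is a minimal sketch: the test URL and the “Googlebot” user agent are only illustrative, and the helper name `is_blocked` is made up for this example.

```python
from urllib.robotparser import RobotFileParser

# The same rules as shown above.
ROBOTS_TXT = """User-agent: *
Disallow: /
"""


def is_blocked(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt rules forbid fetching the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


blocked = is_blocked(ROBOTS_TXT, "https://test.domain.com/any-page")
print(blocked)
```

Bear in mind that this only tells you how a well-behaved crawler should read the file. It doesn’t tell you what a search engine has already indexed.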

Tip 5 – Crawling the test site

You need to understand how your test site will be structured. Using a site crawler, crawl the test site to see how it compares with your current site.

What to do:

- Open the first crawl of your current site and make a copy. Click “Save As” and name the file, for example, “Current Site Crawl for Editing”. This will be your editable copy.

- Then crawl the test site. Export the test site crawl and save this one as “Test Site Crawl”. Make a copy and name it “Test Site Crawl for Editing”. This is the one we’re going to use.

- Take the newly created copy of the current site crawl (Current Site Crawl for Editing) and do a find and replace on all the URLs in a program like Excel, replacing your domain name, “domain.com”, with your test server’s domain, “test.domain.com”.

- Select all the URLs and copy them into a txt file. Save this one as “Testing Crawl for Screaming Frog”. You should have the following files:

  - Current Site Crawl (xls)

  - Current Site Crawl for Editing (xls)

  - Test Site Crawl (xls)

  - Test Site Crawl for Editing (xls)

  - Testing Crawl for Screaming Frog (txt)

- In Screaming Frog, find Mode in the menu bar and select List. The interface will change, and you’ll be able to upload a .txt file.

- Locate your txt file (Testing Crawl for Screaming Frog) of all the URLs you just changed and load that into Screaming Frog. Then hit Start.

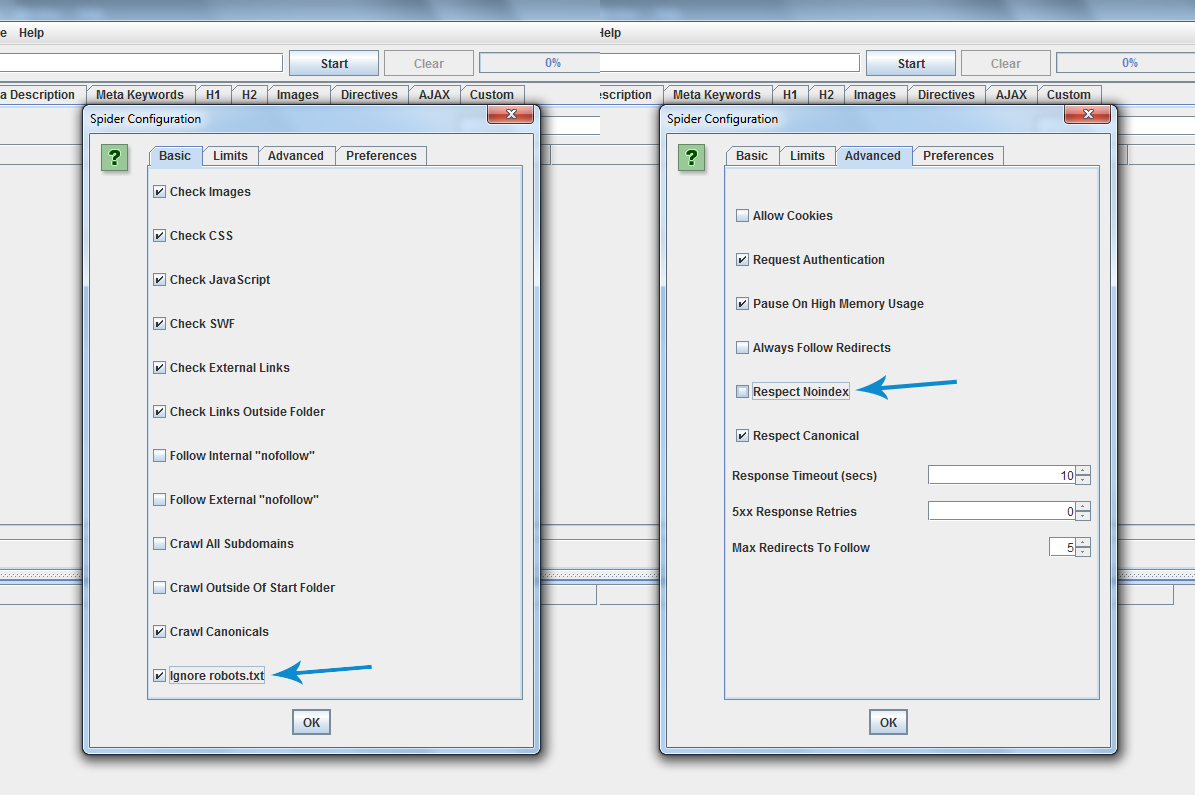

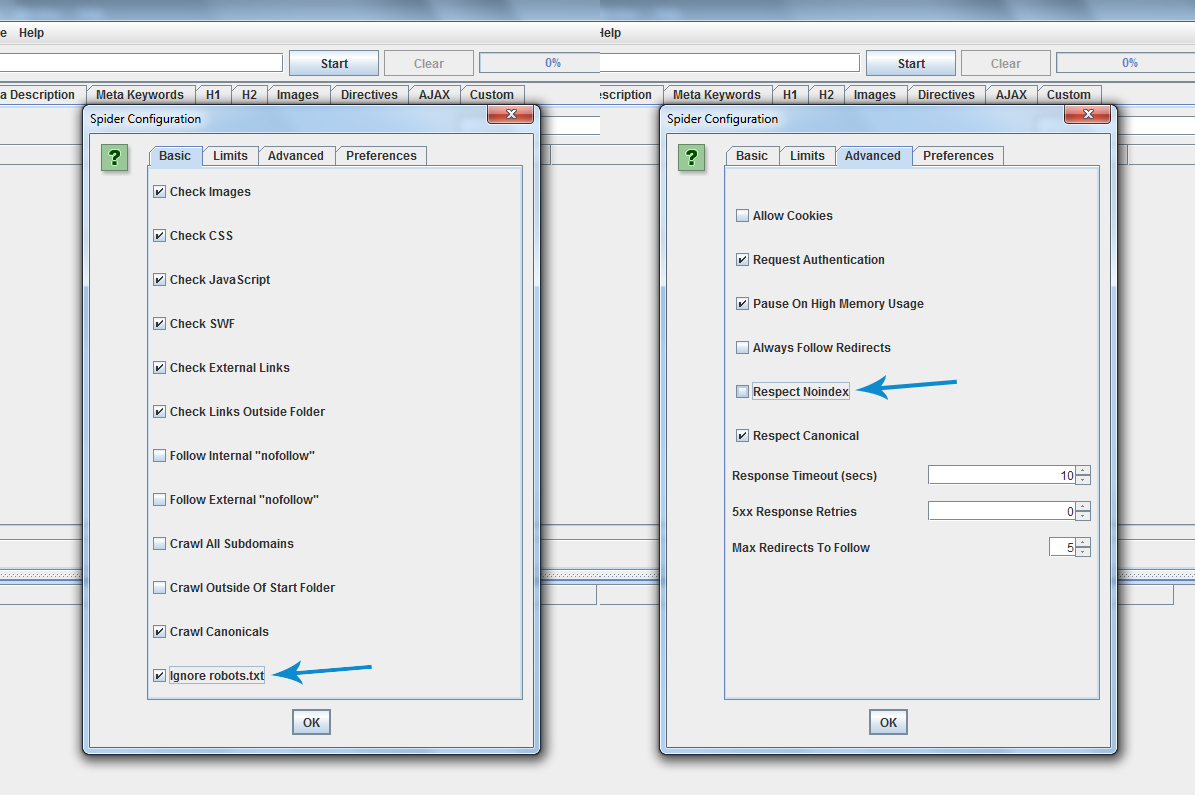

- If you followed the steps correctly, all the URLs will be crawled. If they weren’t, go back and make sure you didn’t miss anything. You may need to allow the crawler to crawl blocked/noindexed URLs. Click Configuration and then Spider, where you’ll find a tick box that says Ignore robots.txt. You may need to tick this. In the Advanced tab of the same window, you’ll see Respect Noindex; you may need to un-tick this, too. Have a look at the example.

Now export all the HTML results and save them as an Excel file. Name it “Final Crawled Test Site”. This is the test crawl you’ll check later. Also hold onto the very first crawl we did of the test site (Test Site Crawl).

You’ll have the following docs:

- Current Site Crawl (xls)

- Current Site Crawl for Editing (xls)

- Test Site Crawl (xls)

- Test Site Crawl for Editing (xls)

- Testing Crawl for Screaming Frog (txt)

- Final Crawled Test Site (xls)

Now you have the data in Excel format, and you can see what works on the test site. This lets you see which pages on the current site are missing from the test site.
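The find-and-replace step in this tip can also be scripted rather than done by hand in Excel. The sketch below assumes the same two hosts used above (“domain.com” and “test.domain.com”). The sample URLs and the helper name `to_test_url` are made up for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def to_test_url(url, live_host="domain.com", test_host="test.domain.com"):
    """Swap the live host for the test host, leaving path and query intact."""
    parts = urlsplit(url)
    if parts.netloc.lower() == live_host:
        parts = parts._replace(netloc=test_host)
    return urlunsplit(parts)


# Hypothetical URLs copied from the current-site crawl.
current_urls = [
    "https://domain.com/",
    "https://domain.com/services/seo?ref=nav",
    "https://other-site.com/page",
]
test_urls = [to_test_url(u) for u in current_urls]

# One URL per line is the plain-text layout assumed here for the upload file.
upload_text = "\n".join(test_urls) + "\n"
print(upload_text)
```

Matching on the host rather than doing a plain text replace leaves URLs on other domains untouched. Writing `upload_text` to a .txt file gives you the equivalent of “Testing Crawl for Screaming Frog”.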

Tip 6 – 404 time

If any of your pages return a 404 error, it means that the page doesn’t exist anymore. So you’ll need to do one of two things:

- Create this URL on the test server.

- Redirect the old URL to the new URL on the test server.

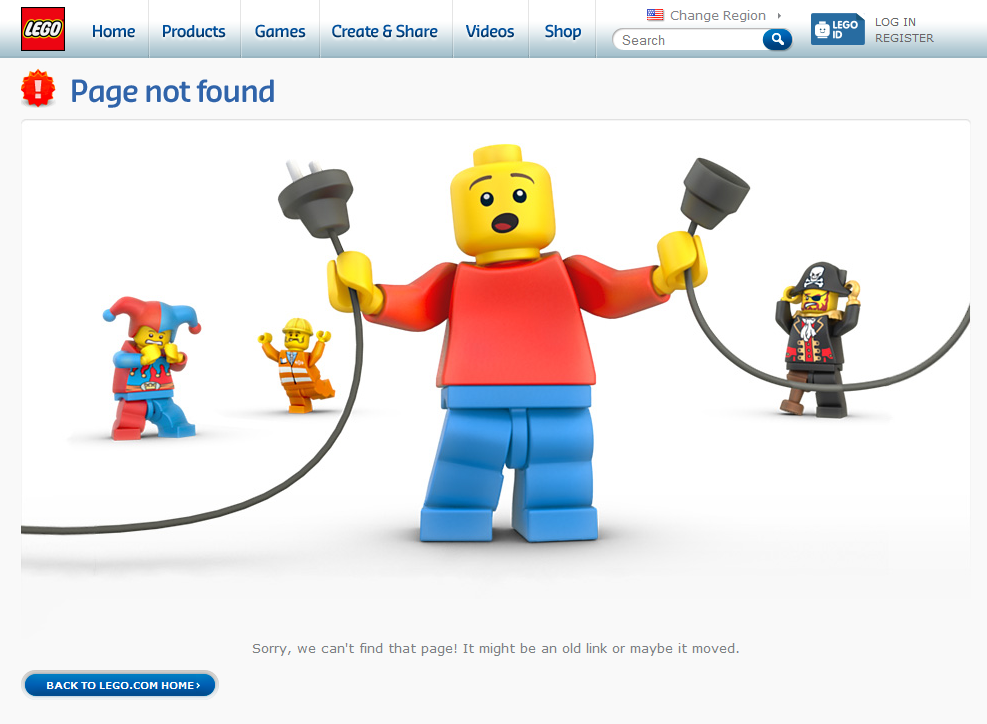

Here’s an example of a 404:

What to do with a URL that isn’t on your current site?

As with any page on your website, it has to be optimised correctly.

When you redirect pages to a new site, you can lose around 10%-30% of your link equity. Even so, redirects give search engines the best opportunity to carry over your old site’s strong reputation.
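To build the list of URLs that need either a new page or a redirect, you can filter the final test-site crawl for 404s and map each one back to its live address. This is a rough sketch: the sample rows are invented, and the “Address” and “Status Code” column names are assumptions about the export layout.

```python
import csv
import io

# Hypothetical rows standing in for the "Final Crawled Test Site" export.
# "Address" and "Status Code" are assumed column names.
FINAL_CRAWL = """Address,Status Code
https://test.domain.com/,200
https://test.domain.com/old-services,404
https://test.domain.com/blog/post-1,404
https://test.domain.com/contact,301
"""


def find_404s(rows, test_host="test.domain.com", live_host="domain.com"):
    """Return the live-site URLs whose test-site equivalents returned 404."""
    missing = []
    for row in rows:
        if row["Status Code"].strip() == "404":
            # Map the test URL back to the live URL it was derived from.
            missing.append(row["Address"].replace(test_host, live_host, 1))
    return missing


rows = csv.DictReader(io.StringIO(FINAL_CRAWL))
needs_action = find_404s(rows)
for url in needs_action:
    print(url)
```

Each URL printed is a page on the current site that has no match on the test site yet.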

Tip 7 – Additional checks

Rank check

A rank check measures how your site performs for a host of keywords in the search engines. You can use this data as a baseline for the new site. If things change after launch, you can compare the results, identify the problems and react.

This is what to look out for:

If a keyword jumps from page 1 to page 10, you could have a problem. Look out for any big or unusual movements by checking the following:

- Did the URL change?

- Did you change any of the meta data?

- Has the page lost all of its content?

- Is there a redirect in place?

- Does it have a noindex tag in place?
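Spotting big movements is easier if you compare the before and after rank checks programmatically. The sketch below is one way to do it, under stated assumptions: the keywords and positions are invented, and the threshold of 10 places is an arbitrary cut-off for a “big” drop that you should tune yourself.

```python
def flag_rank_drops(before, after, threshold=10):
    """Return keywords whose position worsened by at least `threshold` places.

    `before` and `after` map keyword -> position (1 is the top result).
    A keyword missing from `after` is reported with a new position of None.
    """
    flagged = []
    for keyword, old_pos in before.items():
        new_pos = after.get(keyword)
        if new_pos is None or new_pos - old_pos >= threshold:
            flagged.append((keyword, old_pos, new_pos))
    return flagged


# Hypothetical rank checks taken before and after the redesign.
before = {"seo audit": 3, "site redesign": 8, "web design agency": 15}
after = {"seo audit": 4, "site redesign": 95}

drops = flag_rank_drops(before, after)
for keyword, old_pos, new_pos in drops:
    print(keyword, old_pos, "->", new_pos)
```

Any keyword this flags is a candidate for the questions in the list above.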

Content

Don’t delete anything you don’t need to. You might think your old blog posts are dated and useless, but they all add to the credibility of your site. Without them, you’ll lose a chunk of SEO value.

Google Analytics code

Make sure you place your Google Analytics code back in the <head> section of your site. It’s really important to check the e-commerce tracking and goals if you currently have those in place.

Unblocking the site

It’s time to check that the new site allows search engines to index it. Whichever method you used to block the test site, do the reverse now. If you don’t, search engines won’t be able to index the new site, which will create big problems.
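A quick way to double-check a launched page is to look for a leftover robots meta tag in its HTML. The following is a minimal sketch using only Python’s standard library. The two sample pages are made up, and the class and function names are hypothetical. It only inspects meta tags, so it won’t catch a block set in robots.txt or in an HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives from any <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            for part in attr_map.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def has_noindex(html):
    """Return True if the page carries a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Hypothetical page sources: one still blocked, one open to indexing.
blocked_page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
open_page = "<html><head><title>Home</title></head><body>Hi</body></html>"
print(has_noindex(blocked_page), has_noindex(open_page))
```

Feed it the HTML of your key pages after launch. Any page where `has_noindex` returns `True` is still telling search engines to stay away.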

Summary checklist

Here is a checklist to help you run through the steps again.

Think about your SEO from the start of the website

Crawl the current site

Audit your existing site

Stop the test site from being indexed

Crawl the test site

Find and replace URLs

Check 404s on test site

Optimise all new pages
